What is a VPC?#

Virtual Private Cloud

An isolated virtual network in your AWS account. A default VPC is already present, but it is recommended to create your own custom VPC based on your environment.

You can create an IPv4-only or dual-stack (IPv4 + IPv6) VPC.

Default VPC#

You can modify, delete, and recreate a default VPC.

Creating a default VPC does not need VPC specific permissions as all resources are created by AWS

Custom#

A custom VPC cannot be marked as default, even if there is no default VPC in the region.

VPC CIDR Blocks#

When creating a VPC you must specify an IPv4 CIDR range. This becomes the VPC’s primary CIDR range. All private ranges defined in RFC 1918 are valid, as is the shared address space 100.64/10 (CGNAT, RFC 6598):

- 10.0/8

- 172.16/12

- 192.168/16

- 100.64/10 (CGNAT)

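The largest and smallest VPC CIDR ranges that can be specified are /16 and /28 respectively. So you cannot create a /8 VPC, and the smallest VPC, a /28, has only 16 addresses, 14 of them usable in conventional networking terms (fewer in practice, since AWS reserves 5 addresses in each subnet).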

Subnet mask for prefix /28 = 255.255.255.240

Total IPs = 10.0.0.0 to 10.0.0.15 (16 IPs). Usable IPs = 10.0.0.1 to 10.0.0.14 (14 IPs)
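The /28 arithmetic above can be checked with Python's standard `ipaddress` module. This is a minimal sketch using the same example block; it applies the conventional network/broadcast exclusion, not the AWS-specific reservations.

```python
import ipaddress

# Example /28 block taken from the text above.
block = ipaddress.ip_network("10.0.0.0/28")

netmask = str(block.netmask)                 # dotted-quad subnet mask
total = block.num_addresses                  # every address in the block
first, last = str(block[0]), str(block[-1])  # first and last address
hosts = list(block.hosts())                  # excludes network and broadcast addresses

print(netmask, total, first, last, len(hosts))
```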

DANGER

Avoid using the Docker default bridge range 172.17.0.0/16 as a CIDR range for a VPC, since it may cause conflicts with some AWS services that use the same range like SageMaker.

Constraints#

You can assign a secondary CIDR range to a VPC. The constraints depend on the range the primary CIDR belongs to:

| Primary CIDR range | Restricted | Allowed |
|---|---|---|
| 10.0/8 | 198.19/16, 172.16/12, 192.168/16 | Non-overlapping range from 10.0/8; 100.64/10; any other public range |
| 172.16/12 | 198.19/16, 10.0/8, 192.168/16 | Non-overlapping range from 172.16/12; 100.64/10; any other public range |
| 192.168/16 | 198.19/16, 10.0/8, 172.16/12 | Non-overlapping range from 192.168/16; 100.64/10; any other public range |
| 198.19/16 | 10.0/8, 172.16/12, 192.168/16 | 100.64/10; any other public range |
| Any other public range, or 100.64/10 | 198.19/16, 10.0/8, 172.16/12, 192.168/16 | Non-overlapping range from 100.64/10; any other public range |
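The table can be read as a small rule set. The sketch below is one way to encode it, not an AWS API: `secondary_allowed` and `rfc1918_family` are hypothetical helper names, prefix-length limits (/16 to /28) are not checked, and a second 198.19/16 block on a 198.19/16 primary is rejected conservatively because the table does not cover that case.

```python
import ipaddress

RFC1918 = [ipaddress.ip_network(c)
           for c in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
ALWAYS_RESTRICTED = ipaddress.ip_network("198.19.0.0/16")

def rfc1918_family(net):
    """Return the RFC 1918 range that contains net, or None."""
    for family in RFC1918:
        if net.subnet_of(family):
            return family
    return None

def secondary_allowed(primary, secondary):
    """Apply the table: True if secondary may be added to a VPC with this primary."""
    p = ipaddress.ip_network(primary)
    s = ipaddress.ip_network(secondary)
    if s.overlaps(p):
        return False              # must never overlap the primary range
    if s.overlaps(ALWAYS_RESTRICTED):
        return False              # 198.19/16 is restricted (conservative for a 198.19 primary)
    s_family = rfc1918_family(s)
    if s_family is None:
        return True               # 100.64/10 or a public range
    # An RFC 1918 secondary is only allowed from the primary's own RFC 1918 range.
    return s_family == rfc1918_family(p)

print(secondary_allowed("10.0.0.0/16", "10.1.0.0/16"))
print(secondary_allowed("10.0.0.0/16", "172.16.0.0/16"))
```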

Subnet CIDR Blocks#

A subnet CIDR block can be the same as the VPC CIDR block, which gives 1 subnet in the VPC. Or it can be a subset of the VPC CIDR block, which allows multiple subnets in the VPC.

Subnet CIDR blocks cannot overlap each other.

Similar to the VPC CIDR block, the largest and smallest CIDR blocks for subnets are /16 (65,536 IPs) and /28 (16 IPs).
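As an illustration of carving subnets out of a VPC range and checking the no-overlap rule, here is a short sketch with the `ipaddress` module. The VPC range, the /24 split, and the `any_overlap` helper are assumptions chosen for the example.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")   # hypothetical VPC CIDR

# Split the VPC range into /24 blocks and keep the first three as subnets.
subnets = list(vpc.subnets(new_prefix=24))[:3]

def any_overlap(networks):
    """True if any two networks in the collection share addresses."""
    nets = list(networks)
    return any(a.overlaps(b) for i, a in enumerate(nets) for b in nets[i + 1:])

# This candidate sits inside 10.0.1.0/24, so it would be rejected as a new subnet.
candidate = ipaddress.ip_network("10.0.1.128/25")

print([str(s) for s in subnets])
print(any_overlap(subnets), any_overlap(subnets + [candidate]))
```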

AWS reserves 5 IPs in each subnet block. For example, in a subnet with CIDR block 10.0.0.0/24, the following IPs are reserved by AWS:

- 10.0.0.0: Network address

- 10.0.0.1: VPC router

- 10.0.0.2: DNS server (Route 53), usually the network range + 2

- 10.0.0.3: Future use

- 10.0.0.255: Network broadcast address. There is no broadcast support in a VPC, so it is reserved.
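The reserved addresses follow directly from the subnet's base address, so they can be derived for any subnet. A minimal sketch, with `aws_reserved` and `usable_count` as hypothetical helper names:

```python
import ipaddress

def aws_reserved(subnet_cidr):
    """Return the 5 addresses AWS reserves in a subnet, keyed by purpose."""
    net = ipaddress.ip_network(subnet_cidr)
    base = net.network_address
    return {
        "network address": str(base),
        "VPC router": str(base + 1),
        "DNS server": str(base + 2),
        "future use": str(base + 3),
        "broadcast": str(net.broadcast_address),
    }

def usable_count(subnet_cidr):
    """Addresses left for your resources after the 5 reserved ones."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - 5

print(aws_reserved("10.0.0.0/24"))
print(usable_count("10.0.0.0/24"), usable_count("10.0.0.0/28"))
```

With this reservation, a /28 subnet leaves 11 addresses for resources rather than 14.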

Prefix Lists#

A collection of CIDR block entries (and an optional description).

Properties#

- Supports both IPv4 and IPv6, but these cannot be mixed in the same list.

- Region specific

- Needs a maximum entry count at creation. This can be expanded later.

- Resources referencing them always use the latest version

- Can be shared with other accounts using AWS Resource Access Manager

- The weight of an AWS managed prefix list is the number of entries it takes up in a resource. For example, the CloudFront prefix list has a weight of 55, so it takes up 55 entries in a security group.
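To make the weight idea concrete, the sketch below subtracts a prefix list's weight from a security group's rule budget. The quota of 60 rules is an assumption for illustration (check the actual quota in your account), and `remaining_rule_slots` is a hypothetical helper.

```python
def remaining_rule_slots(rule_quota, rules_in_use, prefix_list_weight):
    """Slots left after referencing a prefix list; a negative value means it does not fit."""
    return rule_quota - rules_in_use - prefix_list_weight

QUOTA = 60              # assumed rules-per-security-group quota, not taken from the text
CLOUDFRONT_WEIGHT = 55  # weight quoted in the text above

print(remaining_rule_slots(QUOTA, 3, CLOUDFRONT_WEIGHT))
print(remaining_rule_slots(QUOTA, 10, CLOUDFRONT_WEIGHT))
```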

Types#

AWS Managed#

CIDR blocks of AWS services. You cannot add, edit, share, or delete these.

Customer managed#

Useful for sharing commonly used CIDR blocks in a customer’s environment.

Security Groups#

Endpoints#

VPC endpoints are virtual devices enabling connectivity for compute or services within a VPC.

Types#

Interface#

A collection of managed ENIs with private IPs, used to privately access AWS services, custom services, and services from AWS Marketplace. DynamoDB and S3 are the services that also offer gateway endpoints.

Gateway Load Balancer#

Route traffic to a fleet of virtual appliances using private IPs.

Gateway#

A route table target that uses prefix lists to route traffic destined for Amazon DynamoDB and Amazon S3.

Ingress Routing#

Here, the custom route table attached to the [[internet-gateway|AWS Internet Gateway]] forwards all incoming traffic to the network interface (eni0) of an EC2 instance. This instance could be running a security appliance like network-firewall to inspect the traffic.

Similarly, the route table for application subnet has a route which forwards all internet bound traffic to the instance’s network interface. Note: The appliance doesn’t do any NAT, so the internet communication is based on the public IP of the workload instance.

How to distribute workloads in VPCs?#

There are multiple ways to distribute workloads across different VPCs. Each approach has its pros and cons, so they should be evaluated based on the customer’s specific requirements.

- Based on Environment (dev, prod, shared services).

- Based on Compliance needs like public facing services, internal only, PCI compliant resources etc.

- Based on data sovereignty restrictions (US, EU etc.), across different regions.

- Based on company structure. These can be segregated further into their own specific accounts for each company division and so on.

VPC to VPC Connectivity#

As the number of VPCs in a customer’s environment increases, the next obvious question is how to maintain connectivity between different VPCs, between VPCs and on-prem systems, and so on.

There are 2 broad approaches to establishing such connections. They are -

- Point to point - Traffic flows between specific VPCs.

- Hub and spoke - Traffic flows via a central resource based on certain defined rules.

VPC Peering#

Point to point connectivity model.

This is a point to point connectivity method in which 2 VPCs are connected directly. It allows bidirectional traffic to flow between them. It does not support transitive connectivity.

Suppose VPC A is peered with VPC B, and VPC B is peered with VPC C. Traffic from VPC A cannot reach VPC C via B. To enable connectivity between A and C, a new peering connection must be established.

Maximum limit of 125 peering connections per VPC, so this does not scale very well.
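The non-transitive rule and the scaling problem can both be sketched in a few lines. This is a toy model, not an AWS API: peerings are a set of unordered pairs, reachability requires a direct peering, and `full_mesh` counts the connections needed when every VPC must reach every other one. The default limit of 125 is the figure quoted above.

```python
def can_reach(src, dst, peerings):
    """Peering is not transitive: only a direct peering gives connectivity."""
    return frozenset((src, dst)) in peerings

def full_mesh(vpc_count, per_vpc_limit=125):
    """Return (total connections, connections per VPC, within per-VPC limit?)."""
    per_vpc = vpc_count - 1
    total = vpc_count * (vpc_count - 1) // 2
    return total, per_vpc, per_vpc <= per_vpc_limit

peerings = {frozenset(("A", "B")), frozenset(("B", "C"))}
print(can_reach("A", "B", peerings), can_reach("A", "C", peerings))
print(full_mesh(10), full_mesh(127))
```

Ten VPCs already need 45 peering connections for a full mesh, which is why the hub-and-spoke options become attractive as the VPC count grows.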

Lowest cost for connecting VPCs since the charges are only for the traffic passing through the connection, there is no standing cost.

When using a VPN, or [[direct-connect|Direct Connect]], each VPC must be connected individually to the DC or VPN. Peering does not support transitive routing, remember?

Works cross-region, cross-account.

Best suited for fewer than 10 VPCs.

Traffic isolation can be achieved using security groups on specific resources, or NACLs at the subnet level.

A hub-and-spoke model using Transit Gateway. Cost implications.

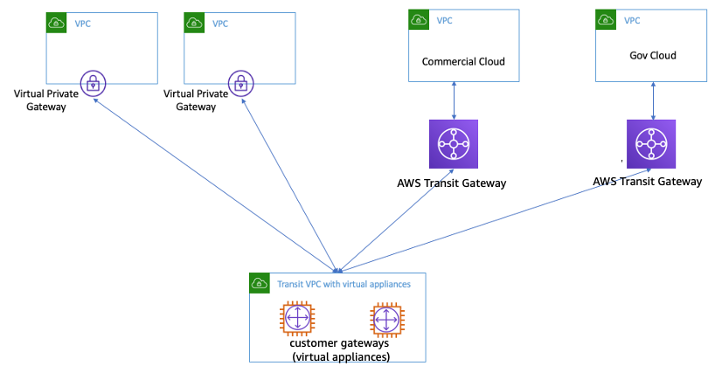

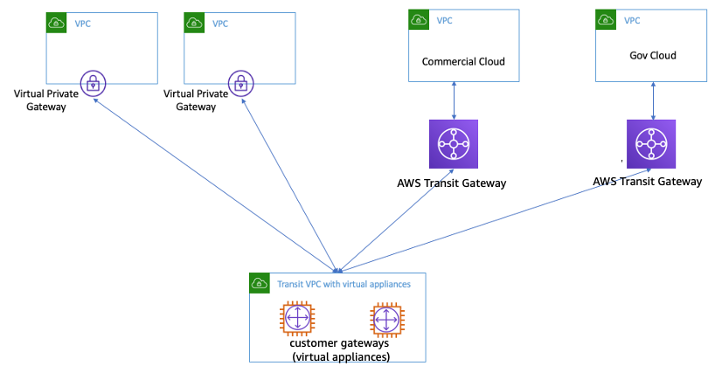

Transit VPC#

A hub-and-spoke model.

In this solution, a central VPC with customer-managed software appliances is used to route traffic between various connected networks. All VPCs can connect to this central VPC to gain access to the connected networks. This is similar to the Transit Gateway solution but is managed by the customer.

Transitive connectivity is provided by a VPN overlay network using BGP over IPsec.

The central VPC contains EC2 instances running software appliances that route the traffic to their destination using the VPN overlay network.

Customers can use the same L7 firewall, IDS, and IPS products for their on-prem and cloud workloads if they use a vendor-provided product as the software appliance.

Not very high performance: throughput is limited to 1.25 Gbps per VPN tunnel.

It brings in additional complexity, cost, administrative and performance challenges.

[[private-link|AWS Private Link]]#

Access AWS services and third-party SaaS apps directly in your VPC without traversing the internet.

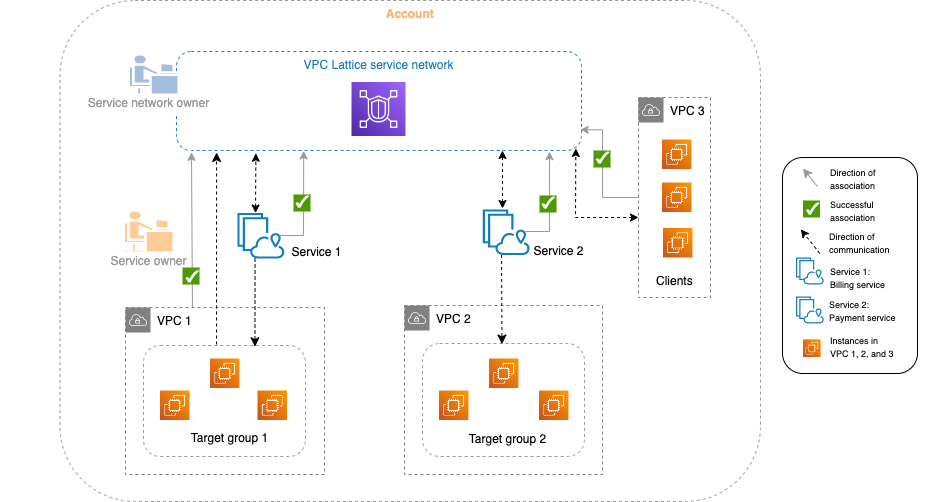

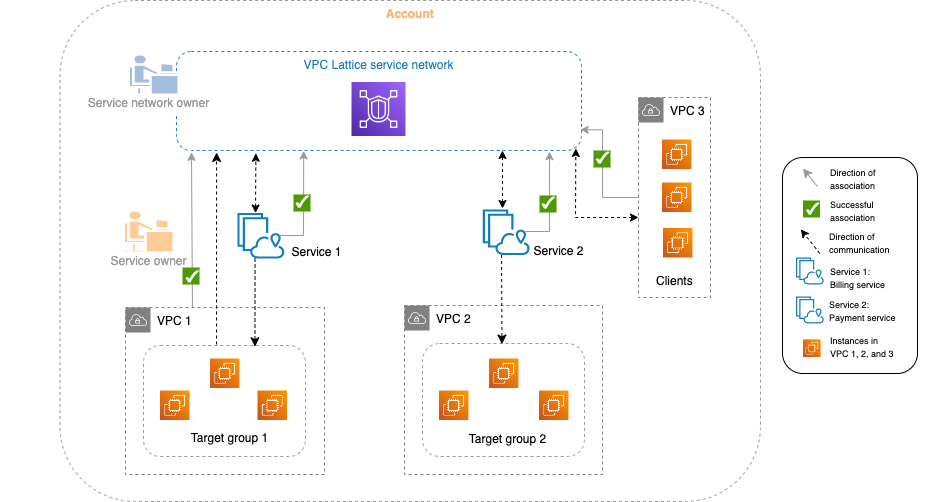

VPC Lattice#

Abstracts away the network complexity for application developers.

It creates a logical application networking layer, called a service network, which allows service-to-service communication across VPCs, regions, and accounts over HTTP/HTTPS or gRPC. It operates on a data plane in the VPC using link-local addresses from 169.254/16 (RFC 3927). This data plane is exposed via an endpoint within the VPC. Once a service network is associated with a VPC, services deployed within the VPC can discover other services made available by the service network.

QUESTION

In the above diagram, while VPC1, 3 are associated with the service network, VPC2 isn’t. So what’s the impact of this?

All 3 services are associated with the service network, meaning they are discoverable by resources running in VPCs associated with the service network, i.e. resources in VPC1 and VPC3. But they aren’t discoverable from VPC2. This means the service in VPC2 doesn’t depend on any other service and just responds to the requests it receives.

Service#

An independent software unit that performs a specific function. When configuring a service with a service network, you use resources similar to those of a load balancer: listeners, routing rules, and target groups.

Service directory#

Central directory (at account level) of registered services, either deployed in the same account or shared via AWS Resource Access Manager.

Service network#

Logical grouping of services to enable connectivity and apply common policies

Auth Policy#

Resource policy to ensure only authenticated and authorized clients use the services

VPC Sharing#

VPC and/or subnets can be shared across accounts in an Org using AWS Resource Access Manager.

This is a low-cost, low-operational-overhead solution. However, it is quite permissive in terms of network isolation.

Network isolation can be improved by grouping apps by behaviour and using separate subnets with a relevant NACL for each group. Further isolation can be achieved by using security groups.

Private NAT Gateway#

Connect networks with overlapping CIDR blocks.

INFO

Here, the 2 VPCs share an overlapping, non-routable range of 100.64/16. Based on the secondary CIDR [[#Constraints|constraints]], select a non-overlapping routable CIDR block for each VPC.

VPC A = 10.0.1.0/24, VPC B = 10.0.2.0/24

Now, subnets are created within this secondary range in both VPCs: 10.0.1.0/25 and 10.0.2.0/25. These new subnets are used to launch private NAT gateways, which do the address translation. The new subnets are attached to the transit gateway, with appropriate routes defined so that they can reach each other. In the non-routable subnets, routes are updated to send all non-local requests to the NAT.

- As per the VPC A non-routable subnet route table, the packet with destination of ALB IP (10.0.2.x) is headed to the private NAT in VPC A routable subnet.

- Private NAT does the network translation, and forwards the packet to the TGW attachment as per the VPC A routable subnet route table.

- As per the TGW route table, the packet is then forwarded to the ALB in VPC B routable subnets.

- The ALB then forwards the packet to the destination in VPC B non-routable subnet.

Response follows a similar path back - 5, 6, 7, 8.
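The key step in the walkthrough is the source translation done by the private NAT. The sketch below models only that step, using the CIDR ranges from the text; the `Packet` class, the `private_nat` function, and the specific host and NAT addresses are hypothetical values picked for illustration.

```python
import ipaddress
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Packet:
    src: str
    dst: str

def private_nat(packet, nat_ip):
    """Source NAT: swap the non-routable source for the NAT gateway's routable IP."""
    return replace(packet, src=nat_ip)

# Ranges from the text: both VPCs share 100.64.0.0/16, so it cannot be routed between them.
non_routable_a = ipaddress.ip_network("100.64.0.0/16")
non_routable_b = ipaddress.ip_network("100.64.0.0/16")
routable_a = ipaddress.ip_network("10.0.1.0/24")
routable_b = ipaddress.ip_network("10.0.2.0/24")

# Hypothetical workload in VPC A calling the ALB in VPC B's routable range.
pkt = Packet(src="100.64.0.10", dst="10.0.2.20")
translated = private_nat(pkt, nat_ip="10.0.1.5")   # hypothetical NAT address in 10.0.1.0/25

print(non_routable_a.overlaps(non_routable_b), routable_a.overlaps(routable_b))
print(translated)
```

After translation, both ends of the packet carry addresses from non-overlapping ranges, which is what lets the transit gateway route it.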

Reference

VPC peering vs. Transit VPC vs. Transit Gateway#

Comparison of VPC peering, Transit VPC, and Transit Gateway

| Criteria | VPC peering | Transit VPC | Transit Gateway |
|---|---|---|---|
| Architecture | Full mesh | VPN-based hub-and-spoke | Attachments-based hub-and-spoke. Can be peered with other TGWs. |
| Complexity | Increases with VPC count | Customer needs to maintain EC2 instance/HA | AWS-managed service; increases with Transit Gateway count |
| Scale | 125 active Peers/VPC | Depends on virtual router/EC2 | 5000 attachments per Region |
| Segmentation | Security groups | Customer managed | Transit Gateway route tables |
| Latency | Lowest | Extra, due to VPN encryption overhead | Additional Transit Gateway hop |
| Bandwidth limit | No limit | Subject to EC2 instance bandwidth limits based on size/family | Up to 50 Gbps (burst)/attachment |
| Visibility | VPC Flow Logs | VPC Flow Logs and CloudWatch Metrics | Transit Gateway Network Manager, VPC Flow Logs, CloudWatch Metrics |
| Security group cross-referencing | Supported | Not supported | Not supported |
| Cost | Data transfer | EC2 hourly cost, VPN tunnels cost and data transfer | Hourly per attachment, data processing, and data transfer |

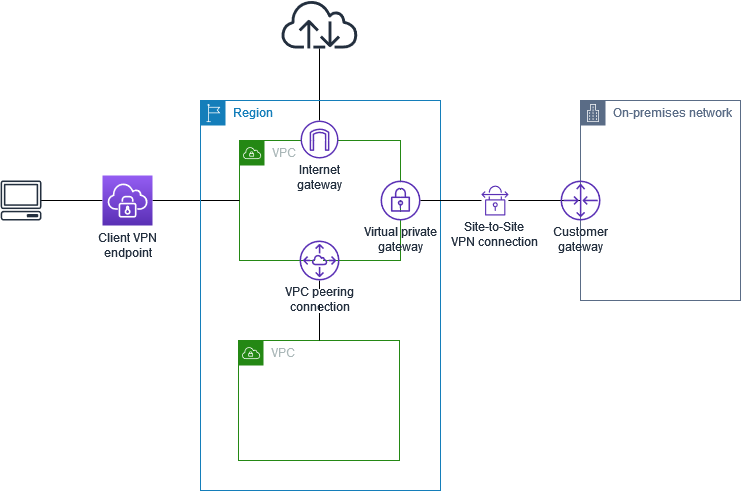

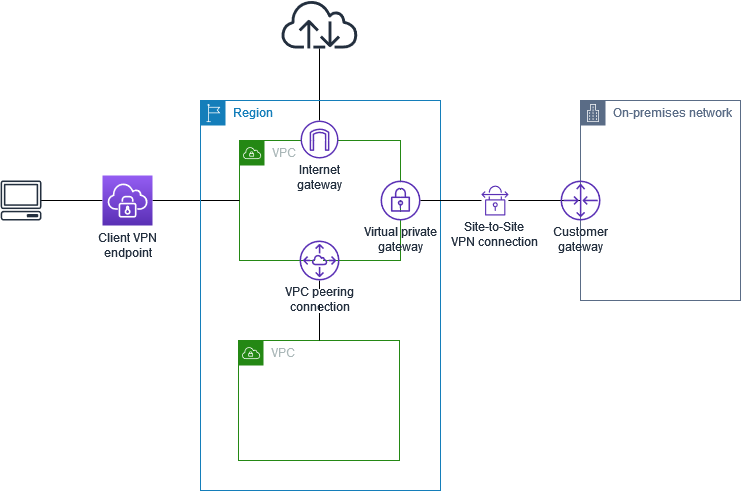

Hybrid Connectivity#

There are 2 approaches to establish hybrid connectivity. Both utilize VPN, but differ in where and how the VPN connection terminates.

One to One#

In this case, each VPC is connected to the VPN individually using Direct Connect/Virtual Private Gateway.

Edge Consolidation#

Connect the VPN to a central Transit Gateway and then attach VPCs to this central Transit Gateway.

Once the approach is decided, there are 4 different ways to terminate the VPN connection on AWS:

Transit Gateway#

Recommended option, to connect VPN to a central Transit Gateway.

Cost = AWS Transit Gateway Pricing + AWS VPN pricing.

EC2#

Some customers might want to use 3rd party software products, so terminating the VPN on an EC2 instance is also an option. This is basically one to one connectivity.

To enable Edge consolidation, you can use the [[#Transit VPC]] pattern. However, the same drawbacks as highlighted in that section remain.

This is also an option when wanting to use GRE.

Virtual Private Gateway VGW#

Great option when starting out, since this is a [[#One to One]] connectivity design where you need to configure this on a per VPC basis.

Offers redundancy due to 2 tunnels/connection design.

Does not support ECMP for VPN connections so throughput is limited to 1.25 Gbps per tunnel.

Cost = AWS VPN pricing only, no charge for Virtual Private Gateway

Question

How are Virtual Private Gateway and Customer Gateways related?

on-prem ------ aws customer ------ aws virtual

device ------ gateway ------ private gw ---- aws vpc

|<--------- site to site VPN connection --------->

Question

How are Virtual Private Gateway and Direct Connect related?

on-prem ---- direct ---- virtual --- aws virtual

device ---- connect ---- interface --- private gw --- aws vpc

Client VPN Endpoint#

Another option when starting out. This is a One to One connectivity design where you need to configure this on a per VPC basis, or make use of other VPC connectivity features to achieve an Edge Consolidation connectivity design.

To configure client VPN endpoint, you need -

- Target Network - subnets in a VPC, 1 per AZ.

- Additional routes - local, to peered VPC or VPGW etc.

- Authentication mechanism, one of:

  - SAML 2.0 (single IdP only)

  - Mutual authentication, cert based. Each client needs a new cert.

  - Active Directory

- Client CIDR block with a prefix length between /12 and /22.

- Server certificate in [[AWS Certificate Manager]], regardless of the authentication mechanism selected

- Enable self service portal (optional)

- Enable split tunnel (optional)
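The client CIDR requirement from the list above is easy to check before creating the endpoint. A minimal sketch, assuming only the /12 to /22 bound stated here; `valid_client_cidr` is a hypothetical helper and does not check for overlap with the target VPC.

```python
import ipaddress

def valid_client_cidr(cidr):
    """True if cidr parses as a network and its prefix length is between /12 and /22."""
    try:
        net = ipaddress.ip_network(cidr)   # strict parsing: host bits must be zero
    except ValueError:
        return False
    return 12 <= net.prefixlen <= 22

for cidr in ("10.100.0.0/16", "10.100.0.0/24", "10.0.0.0/8"):
    print(cidr, valid_client_cidr(cidr))
```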

Once configured, download the client VPN configuration file, and use it to connect to the VPN endpoint. This file contains info like the DNS name of the VPN endpoint, authorization mechanism, client CIDR blocks to use etc.

Authorization#

Security groups (attached to the client VPN endpoint) and network-based authorization rules using either AD attributes or IdP-provided metadata such as groups.

Split Tunnel#

Use this to send only specific traffic to the AWS tunnel. By default, the client VPN endpoint adds a 0.0.0.0/0 route to the client, which sends all traffic from the client machine to the AWS tunnel. This can increase data transfer costs if everything goes via AWS.

Split tunnel adds specific routes to the client device route table, which ensures only the traffic destined for AWS is sent over the tunnel. When using split tunnel, make sure there is no 0.0.0.0/0 route in the client VPN route table, otherwise traffic not destined for AWS will still be sent to the tunnel.
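The difference between full tunnel and split tunnel comes down to which route wins on the client. The sketch below uses a simple longest-prefix match over a route table to show this; the route tables, next-hop labels, and addresses are hypothetical, and real client routing tables contain more entries.

```python
import ipaddress

def next_hop(routes, destination):
    """Pick the next hop of the most specific (longest prefix) matching route."""
    dst = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), hop)
        for cidr, hop in routes.items()
        if dst in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]

# Full tunnel: the VPN pushes a default route, so everything goes through AWS.
full_tunnel = {"0.0.0.0/0": "vpn"}
# Split tunnel: only the (hypothetical) VPC range is sent to the VPN.
split_tunnel = {"0.0.0.0/0": "local-internet", "10.0.0.0/16": "vpn"}

for table in (full_tunnel, split_tunnel):
    print(next_hop(table, "203.0.113.10"), next_hop(table, "10.0.3.7"))
```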

Connect On-prem network to AWS backbone network.

Limits#

250 network interfaces per VPC.

References#