K8s Scheduling incubating

Which pod goes where?

How does it help?

- Opportunities for cost savings through better scheduling (Snorkel AI case study).
- Restrict workloads to specific nodes. For example, run GPU workloads on GPU nodes, or keep CPU-only workloads off GPU nodes.
- Put pods that communicate with one another in the same zone to avoid inter-zone traffic charges [[aws-well-architected#AZ Affinity]].
- Use anti-affinity to spread pods across failure domains/topologies (AZ or Region).

What is a Scheduler?

Summary

| Scheduling | Depends On | Reference |
|---|---|---|
| nodeName | node name | |
| nodeSelector | node label | |
| nodeAffinity | node label | nodeaffinity |
| nodeAntiAffinity (no dedicated field; negated nodeAffinity) | node label | |
| podAffinity | pod label, node label (topology key) | podaffinity |
| podAntiAffinity | pod label, node label (topology key) | |

The options below control which nodes pods are scheduled on, starting with the simplest one.

Node Name

- Schedules a pod onto a specific node, no questions asked. The scheduler skips the pod entirely; the kubelet on the named node tries to run it.
- If NO node with the specified `nodeName` exists, the pod does not run, and in some cases it may be automatically deleted.
- If the named node does not have enough resources, the pod fails with a reason such as `OutOfmemory` or `OutOfcpu`.
- In cloud environments, node names are often dynamic rather than fixed, so hard-coding them is fragile.
- Useful for a custom [[#What is a Scheduler?|scheduler]], or when you need to bypass any configured schedulers.

Property

```yaml
spec:
  nodeName: kube01
```
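A complete Pod manifest using `nodeName`, as a minimal sketch. The pod name and `nginx` image are illustrative; `kube01` is the example node name from the property above and must exist in your cluster (check with `kubectl get nodes`).

```yaml
# Minimal sketch: pod/image names are illustrative, kube01 must be a real node
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  nodeName: kube01 # bypasses the scheduler; the kubelet on kube01 runs the pod
  containers:
    - name: nginx
      image: nginx
```

Because the scheduler never processes this pod, only the kubelet's own admission checks (for example, resource fit) decide whether it runs.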

Node Selector

- Simplest label-based way to control where a pod is scheduled.
- Add `spec.nodeSelector` to the pod configuration and specify one or more existing node labels. The pod is only scheduled onto nodes that carry all of them.
- Can also be used to restrict the use of nodes, so that new workloads are not deployed to them.
- Be careful when using labels to restrict nodes: a node's `kubelet` can apply labels to its own node object, so it shouldn't be able to apply or update the labels you rely on for isolation.
- Use the NodeRestriction admission plugin to enforce this. It prevents kubelets from setting or modifying labels with the `node-restriction.kubernetes.io/` prefix, so use that prefix for isolation labels.

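Property

```yaml
spec:
  nodeSelector:
    key: value # every listed label must be present on the node (AND)
```

`spec.nodeSelector` is a plain map of labels. The `matchExpressions`/`matchFields` form below is a NodeSelectorTerm, which is only accepted under `spec.affinity.nodeAffinity`, not under `spec.nodeSelector`:

```yaml
matchExpressions:
  - key: string
    operator: In | NotIn | Exists | DoesNotExist | Gt | Lt
    values: [string] # array is replaced during strategic merge
matchFields:
  - key: string # only metadata.name is supported
    operator: In | NotIn
    values: [string] # array is replaced during strategic merge
```

Within one NodeSelectorTerm, all `matchExpressions` and `matchFields` requirements are ANDed.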

```
kubectl explain pod.spec.affinity.nodeAffinity
KIND: Pod
VERSION: v1

FIELD: nodeAffinity <NodeAffinity>

DESCRIPTION:
  Describes node affinity scheduling rules for the pod.

  Node affinity is a group of node affinity scheduling rules.

FIELDS:
  preferredDuringSchedulingIgnoredDuringExecution <[]PreferredSchedulingTerm>
    The scheduler will prefer to schedule pods to nodes that satisfy the
    affinity expressions specified by this field, but it may choose a node that
    violates one or more of the expressions. The node that is most preferred is
    the one with the greatest sum of weights, i.e. for each node that meets all
    of the scheduling requirements (resource request, requiredDuringScheduling
    affinity expressions, etc.), compute a sum by iterating through the elements
    of this field and adding "weight" to the sum if the node matches the
    corresponding matchExpressions; the node(s) with the highest sum are the
    most preferred.

  requiredDuringSchedulingIgnoredDuringExecution <NodeSelector>
    If the affinity requirements specified by this field are not met at
    scheduling time, the pod will not be scheduled onto the node. If the
    affinity requirements specified by this field cease to be met at some point
    during pod execution (e.g. due to an update), the system may or may not try
    to eventually evict the pod from its node.
```

#todo test this AND/OR condition

Example

#todo test if this actually works :D

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
    - name: nginx
      image: nginx
      imagePullPolicy: IfNotPresent
  nodeSelector:
    disktype: ssd # schedule only onto nodes labelled disktype=ssd
```

Node Affinity

Use node labels to require or prefer the nodes a pod is scheduled on.

Property

kubernetes-api/v1.24/#affinity-v1-core

```yaml
spec:
  affinity:
    nodeAffinity:
    podAffinity:
    podAntiAffinity:
```

RDS-IDE

^^ Easier to remember version of requiredDuringSchedulingIgnoredDuringExecution. Type of [[#Node Affinity]].

Label specified under this property must be present on the node during scheduling. If it’s subsequently removed, the pod still continues to run.

Scheduler will try to find a node that meets the expression. If no matching node is found, the pod is NOT scheduled on any other node; it stays Pending.

If the expression turns to false while the pod is running, it is still allowed to complete the execution.

Property

```yaml
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - nodeSelectorTerm1 # matchExpressions and/or matchFields
          - nodeSelectorTerm2
```

Multiple nodeSelectorTerms can be specified; they are ORed, so a node only needs to match one of them.
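A sketch of how the AND/OR rules combine, relevant to the `#todo` above: requirements inside one term are ANDed, separate terms are ORed. `topology.kubernetes.io/zone` is the well-known zone label; the zone values `us-east-1a`/`us-east-1b` are hypothetical, and `disktype: ssd` is the label from the Example above.

```yaml
# Sketch: zone values are hypothetical
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          # Term 1: node has disktype=ssd AND is in us-east-1a
          - matchExpressions:
              - key: disktype
                operator: In
                values: [ssd]
              - key: topology.kubernetes.io/zone
                operator: In
                values: [us-east-1a]
          # Term 2, ORed with term 1: any node in us-east-1b
          - matchExpressions:
              - key: topology.kubernetes.io/zone
                operator: In
                values: [us-east-1b]
  containers:
    - name: nginx
      image: nginx
```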

PDS-IDE

^^ Easier to remember version of preferredDuringSchedulingIgnoredDuringExecution. Type of [[#Node Affinity]]

[[#What is a Scheduler?|Scheduler]] will try to find a node that meets the expression, and has maximum aggregate weight. If no matching node is found, it still schedules the pod on a node.

If the expression turns to false while the pod is running, it is still allowed to complete the execution.

Property

```yaml
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1-100
          preference: nodeSelectorTerm # matchExpressions and/or matchFields
```
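A sketch of preferred node affinity with two weighted preferences; the label values are hypothetical. Following the `kubectl explain` description above, each candidate node sums the weights of the preferences it matches.

```yaml
# Sketch: label values are hypothetical
spec:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        # Strong preference for SSD nodes
        - weight: 80
          preference:
            matchExpressions:
              - key: disktype
                operator: In
                values: [ssd]
        # Weaker preference for one zone
        - weight: 20
          preference:
            matchExpressions:
              - key: topology.kubernetes.io/zone
                operator: In
                values: [us-east-1a]
```

An SSD node in `us-east-1a` sums to 100, an SSD node elsewhere to 80, a non-SSD node in `us-east-1a` to 20, and any other node to 0 while remaining eligible. The scheduler normalizes this and combines it with other scoring plugins, so treat weights as relative priorities rather than exact scores.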

Node Anti-Affinity

There is NO dedicated configuration field for this. Node anti-affinity is achieved by inverting the node affinity by using negation operators like NotIn, DoesNotExist in the specified nodeSelectorTerm
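A sketch of node anti-affinity for the GPU case from the top of this note: keep a CPU-only pod off GPU nodes. The `hardware-type: gpu` label is hypothetical; use whatever label your GPU nodes actually carry.

```yaml
# Sketch: "hardware-type" is a hypothetical node label
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              # Only nodes whose hardware-type is NOT gpu
              - key: hardware-type
                operator: NotIn
                values: [gpu]
```

`NotIn` also matches nodes that don't carry the `hardware-type` key at all, so unlabelled nodes stay eligible.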

Pod Affinity

Use the labels of already-running pods to choose nodes for a new pod. For example, deploy mysql pods in whichever topology domain (node, zone, ...) has postgresql pods running.

Pod affinity is not symmetric: before placing a pod that declares pod affinity next to existing pods, the scheduler does not need to check whether those existing pods declare any pod affinity of their own.

algorithm used by podaffinity

Property

kubernetes-api/v1.24/#affinity-v1-core

```yaml
spec:
  affinity:
    nodeAffinity:
    podAffinity:
    podAntiAffinity:
```

#todo What’s the difference between pod affinity and node affinity?
#todo What happens if all pods are deployed with a ‘hard’ pod affinity (RDS-IDE)?

RDS-IDE

^^ Easier to remember version of requiredDuringSchedulingIgnoredDuringExecution. Type of [[#Pod Affinity]].

Pods matching the label selector must be running in the node's topology domain at scheduling time. If they are subsequently removed, the pod still continues to run.

[[#What is a Scheduler?|Scheduler]] will try to find a node that meets the expression. If NO matching node is found, the pod is NOT scheduled anywhere and stays Pending.

If the expression turns to false while the pod is running, it is still allowed to complete the execution.

Property

```yaml
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector: # label query over pods
            matchExpressions:
              - key: string
                operator: In | NotIn | Exists | DoesNotExist
                values: [string] # array is replaced during strategic merge
            matchLabels:
              key: value
          namespaces: [string]
          namespaceSelector:
            matchExpressions:
              - key: string
                operator: In | NotIn | Exists | DoesNotExist
                values: [string] # array is replaced during strategic merge
            matchLabels:
              key: value
          topologyKey: string
```

Multiple podAffinityTerms can be specified; they are ANDed, so a node must satisfy all of them.

topologyKey is the key of a node label. Nodes that share the same value for that label form one topology domain, and the affinity rule is evaluated per domain. For example, `kubernetes.io/hostname` means "on the same node", `topology.kubernetes.io/zone` means "in the same AZ", and `topology.kubernetes.io/region` means "in the same region".
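A sketch of the mysql/postgresql example from above, co-locating at zone granularity to avoid inter-zone traffic. The labels `app: mysql`/`app: postgresql` and the password value are hypothetical placeholders.

```yaml
# Sketch: labels and password are hypothetical placeholders
apiVersion: v1
kind: Pod
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: postgresql
          # Same AZ as at least one running app=postgresql pod
          topologyKey: topology.kubernetes.io/zone
  containers:
    - name: mysql
      image: mysql
      env:
        - name: MYSQL_ROOT_PASSWORD
          value: example # placeholder only; use a Secret in practice
```

With `namespaces` and `namespaceSelector` omitted, only pods in the new pod's own namespace are considered.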

PDS-IDE

^^ Easier to remember version of preferredDuringSchedulingIgnoredDuringExecution. Type of [[#Pod Affinity]].

[[#What is a Scheduler?|Scheduler]] will try to find a node that meets the expression, and has maximum aggregate weight. If NO matching node is found, it still schedules the pod on a node.

If the expression turns to false while the pod is running, it is still allowed to complete the execution.

Property

```yaml
spec:
  affinity:
    podAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1-100
          podAffinityTerm:
            labelSelector: # label query over pods
              matchExpressions:
                - key: string
                  operator: In | NotIn | Exists | DoesNotExist
                  values: [string] # array is replaced during strategic merge
              matchLabels:
                key: value
            namespaces: [string]
            namespaceSelector:
              matchExpressions:
                - key: string
                  operator: In | NotIn | Exists | DoesNotExist
                  values: [string] # array is replaced during strategic merge
              matchLabels:
                key: value
            topologyKey: string
```
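A sketch of preferred pod affinity with two weighted terms at different topology granularities; `app: cache` is a hypothetical pod label.

```yaml
# Sketch: app=cache is a hypothetical pod label
spec:
  affinity:
    podAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        # Best: the same node as an app=cache pod
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: cache
            topologyKey: kubernetes.io/hostname
        # Fallback: at least the same AZ
        - weight: 50
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: cache
            topologyKey: topology.kubernetes.io/zone
```

A node running an `app: cache` pod is also in that pod's zone, so it matches both terms (150 before normalization), while other nodes in the same zone match only the second.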

Warning!

A null (omitted) `labelSelector` matches NO pods. In a required pod affinity term this means no node can satisfy the term, which can make the pod unschedulable!

Pod Anti-Affinity

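Unlike node anti-affinity, pod anti-affinity HAS a dedicated field, `spec.affinity.podAntiAffinity`, with the same required/preferred variants and the same podAffinityTerm structure as [[#Pod Affinity]]. It keeps a pod out of any topology domain that already runs pods matching the label selector. Using NotIn inside podAffinity is not equivalent: that means "near pods that lack the label", not "away from pods that have it".

Required pod anti-affinity is symmetric: even if a new pod doesn’t specify an anti-affinity rule, the scheduler still checks that placing it won't violate an anti-affinity rule of a pod already running in that topology domain.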
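A sketch of using anti-affinity to spread replicas across failure domains, as mentioned at the top of this note: a hard rule of one replica per node plus a soft preference for different AZs. The `web` name, label and `nginx` image are hypothetical.

```yaml
# Sketch: names and labels are hypothetical
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      affinity:
        podAntiAffinity:
          # Hard rule: at most one app=web pod per node
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchLabels:
                  app: web
              topologyKey: kubernetes.io/hostname
          # Soft rule: try to put replicas in different AZs
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchLabels:
                    app: web
                topologyKey: topology.kubernetes.io/zone
      containers:
        - name: web
          image: nginx
```

If there are fewer schedulable nodes than replicas, the extra replicas stay Pending because of the hard rule; use only the preferred form if that is not acceptable.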

Taints and Tolerations

Autoscaling

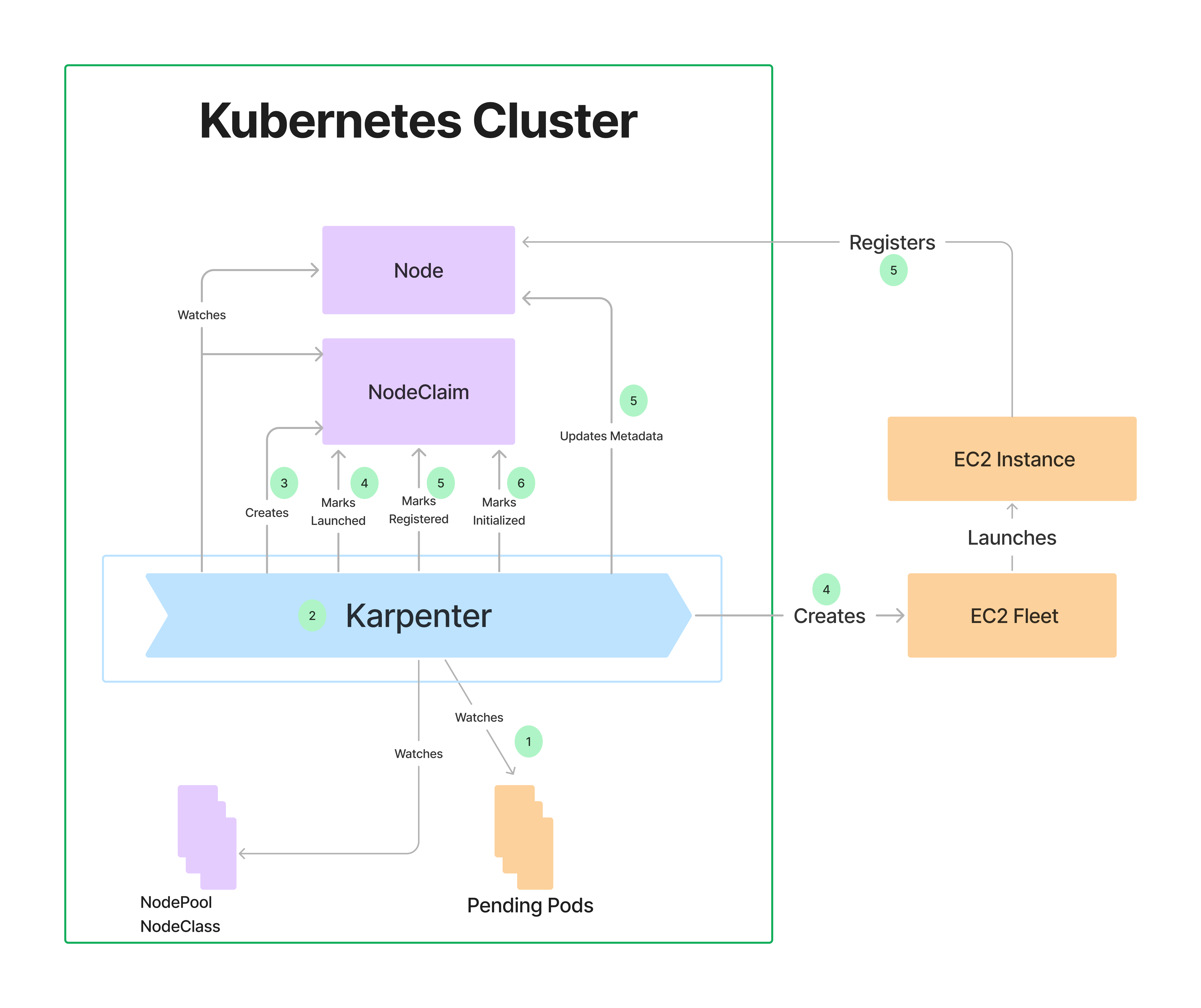

Karpenter

- Karpenter consolidation policies, e.g. `WhenEmpty`
- Pod Disruption Budgets
- Karpenter can switch instance types to right-size nodes when pods aren’t using the node’s resources; this depends on the consolidation policy
- Karpenter AZ awareness: how does it work with EBS volumes, which are tied to a single AZ? aws blogs/volume topolog -awareness
- Karpenter can sometimes overestimate node sizes (DaemonSet overhead), see karpenter/issues/715
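A minimal PodDisruptionBudget sketch for the PDB item above. Karpenter evicts pods through the Kubernetes eviction API during voluntary disruption such as consolidation, so a PDB limits how many matching pods it can take down at once. The `app: web` label and `minAvailable: 2` are hypothetical values.

```yaml
# Sketch: label and minAvailable are hypothetical values
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-pdb
spec:
  minAvailable: 2 # voluntary evictions may not drop matching pods below 2 available
  selector:
    matchLabels:
      app: web
```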